An Introduction to the Stylo Library

What is Stylometry?

Stylometry uses linguistic style to determine who authored some anonymous piece of writing, and it has diverse applications. The authorship of some suicide notes may be questionable. Most forum users have aliases in an attempt to anonymize themselves. And some authors publish their writings under pseudonyms. In these varying cases, stylometry can be used to deanonymize an author.

What is Stylo?

Stylo is a library for the R programming language for conducting stylometric analyses. Stylometry sounds intimidating, but the library makes these analyses simple enough that its users do not have to be computational linguists. Stylo requires little programming; mostly it requires a particular naming convention for files and directories. Users who are uncomfortable writing function calls with many arguments can opt to use a simple GUI.

A Stylometric Analysis of Run the Jewels

Run the Jewels is a group of two rappers, El-P and Killer Mike. In collaborative songs, most assume that each rapper raps the verses that they write. This is an interesting example that can be used to show how the Stylo library works for authorship attribution. Models will be trained on lyrics of each rapper when they worked independently, and will then be fed Run the Jewels lyrics to get an idea of each artist’s creative footprint in a song, and how collaboration might work. This analysis will serve as a concrete example for some of the uses and quirks of Stylo.

Getting the data

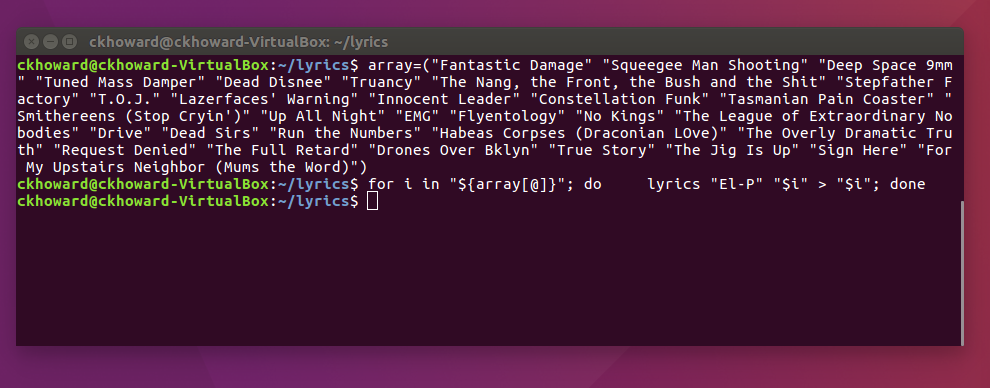

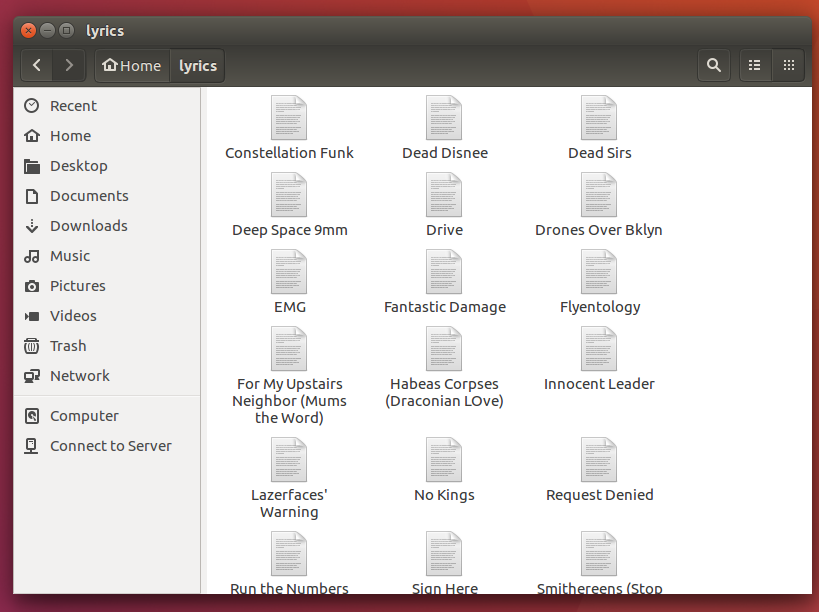

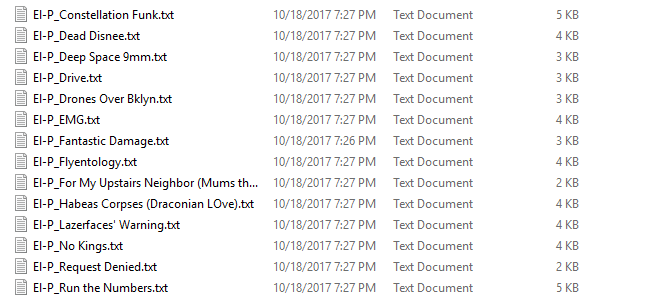

To determine whether the project was viable, that is, whether each artist had a distinctive style, I first had to get the data. In this case, the data is lyrics that are added to a corpus. Using Enrico Bacis’ LyricWikia API to get the lyrics, I used a Bash loop that iterates over an array of hardcoded song names and saves each song’s lyrics into the corpus.

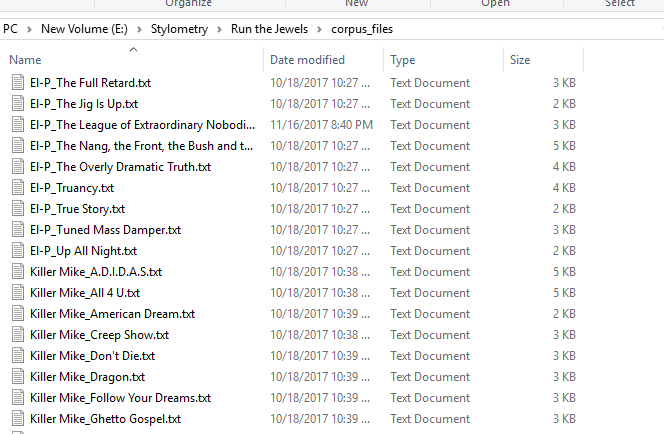

The Stylo R library relies heavily on naming conventions for both directories and files. Everything before the first underscore in a file name is treated as the class to be analyzed, so for texts by George R.R. Martin you would name a file something like GeorgeRRMartin_ASongofIceandFire, and the software will understand that George R.R. Martin is an author of interest, responsible for that work. I did the same with El-P and Killer Mike; otherwise, Stylo would not have treated each rapper as a distinct author.
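To make the convention concrete, here is a minimal base-R sketch of how a class label can be read off a file name. The helper `author_label()` and the file names are hypothetical illustrations, not part of Stylo and not the actual files used in this post.

```r
# Hypothetical helper: return the part of each file name before the first
# underscore, which is the part Stylo treats as the class (author) label.
author_label <- function(filenames) {
  base <- basename(filenames)   # drop any leading directory path
  sub("_.*$", "", base)         # remove the first underscore and everything after it
}

# Illustrative file names (assumed for this example).
files <- c("ElP_DeepSpace9mm.txt", "KillerMike_Reagan.txt")
labels <- author_label(files)
print(labels)
```

A file name without an underscore is returned unchanged, so checking the labels this way before running an analysis is a quick way to spot misnamed files.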

Project Viability / Exploratory Analysis

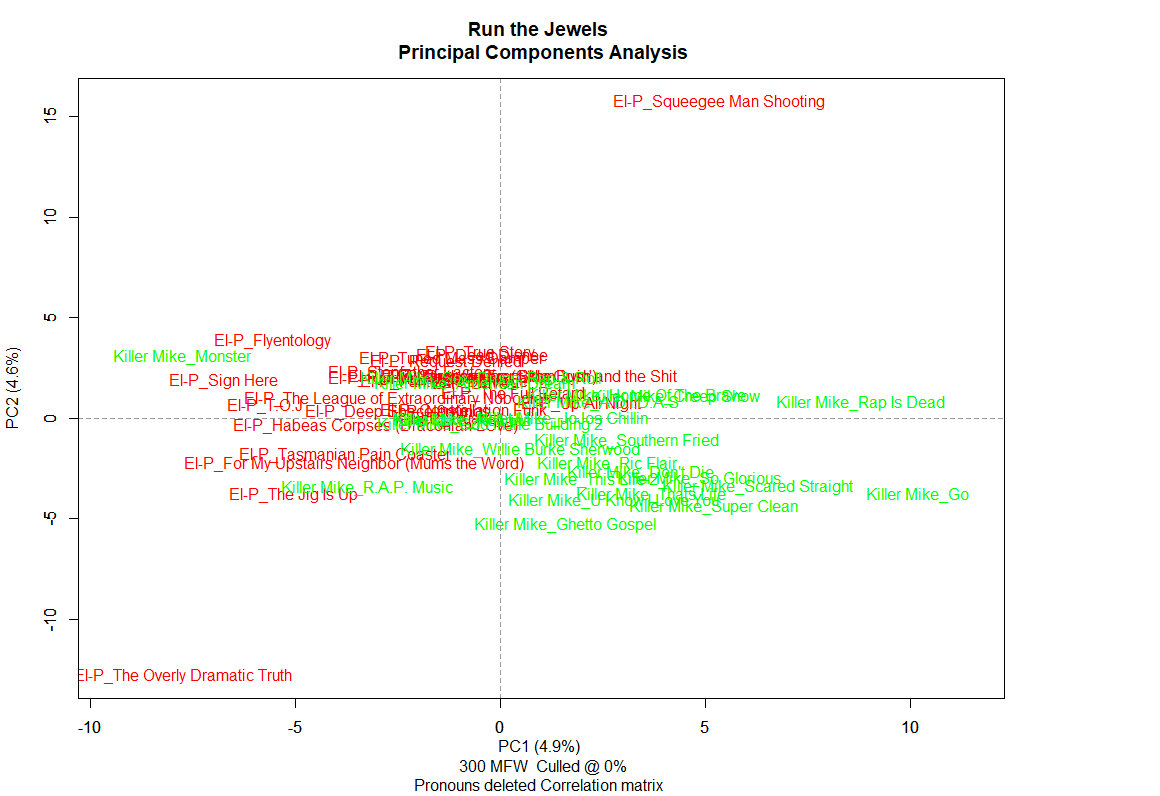

Now that we have the data, we can do a bit of exploration to check whether the project is viable, that is, whether each rapper has a distinctive style. This can be checked with a principal components analysis (PCA). The files already follow Stylo’s naming convention; once the corpus directory is also named as Stylo expects, the function will work when called.

The stylo() function

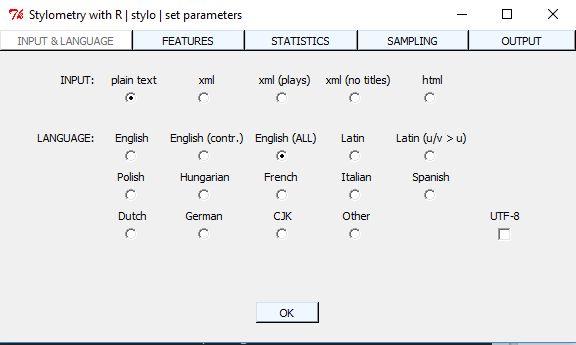

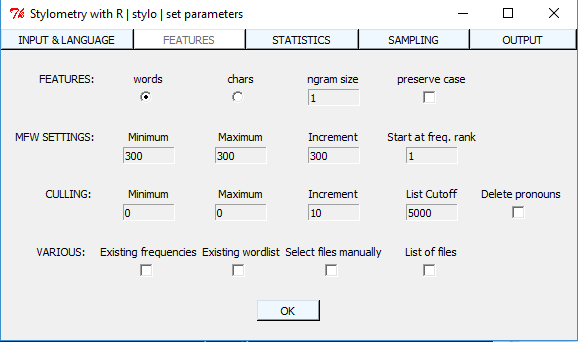

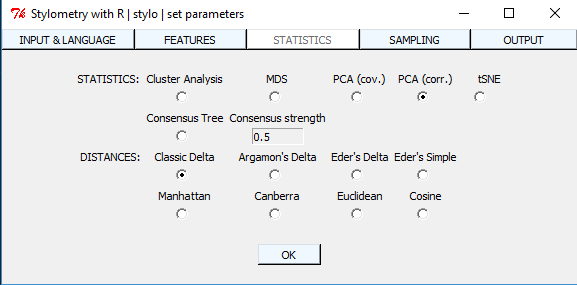

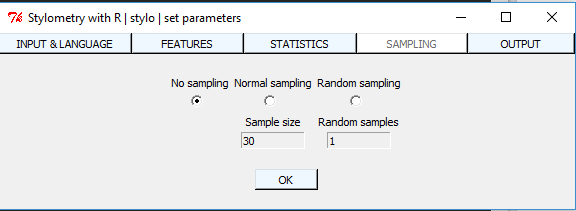

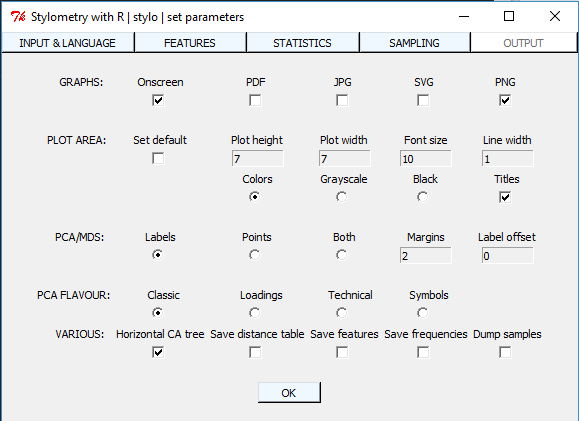

Intuitively enough, one of the most powerful functions in the Stylo library is stylo(). Running this function loads the GUI, where users can customize the analysis. The GUI that loads appears as follows:

We will go into detail about how the parameters work in an intermediate Stylo post, but for now, only directory information is important. The working directory must be set in R; for this example, it is setwd("E:\\Stylometry\\Run the Jewels"). When stylo() is run without explicit arguments (i.e. as stylo()), it looks for the texts in a subdirectory of the working directory. This example uses a directory called corpus_files; note that the package documentation lists corpus as the default name, so a differently named directory may need to be passed through the corpus.dir argument. This directory contains all of the files that you want in your analysis, in this case, all songs by El-P and Killer Mike that I want within the PCA.

After running the function above, with the parameters shown, a PCA is generated. As shown, the library uses the naming convention to color-code each author. El-P is red and Killer Mike is green. It is evident that the rappers differ from each other stylistically when writing music, so the project seems viable. We can proceed to using some of the more interesting tools that Stylo offers.

Quick Recap

All that is needed to generate this PCA is:

- Proper naming of the directory/corpus

- Proper naming of files in the directory/corpus

- A simple one-line function call
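For readers who prefer to skip the GUI, the call could look roughly like the sketch below. The wrapper `run_pca()` is hypothetical, and the settings (a correlation-matrix PCA on the 100 most frequent words) are assumptions chosen for illustration; the post does not state which values were selected in the GUI.

```r
# Sketch: run the exploratory PCA without the GUI.
# Assumes the stylo package is installed and the texts live in ./corpus_files.
run_pca <- function(corpus_dir = "corpus_files") {
  stopifnot(dir.exists(corpus_dir))
  stylo::stylo(
    gui = FALSE,                   # take settings from arguments, not the GUI
    corpus.dir = corpus_dir,       # subdirectory holding the Author_Title files
    analysis.type = "PCR",         # PCA on a correlation matrix (assumed choice)
    mfw.min = 100, mfw.max = 100   # assumed: use the 100 most frequent words
  )
}

# Only attempt the analysis when stylo and the corpus are actually available.
if (requireNamespace("stylo", quietly = TRUE) && dir.exists("corpus_files")) {
  pca_results <- run_pca()
}
```

Passing corpus.dir explicitly avoids relying on whatever directory name the installed version of the package expects by default.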

Using the SVM Classification Algorithm

Stylo’s classification power is what lures most of its users. With other tools, cleaning the data and building a classifier takes several lines of code that aren’t too straightforward, raising the barrier to entry for those who aren’t computationally inclined. As with the last function, the Stylo library makes this simpler: with properly named files and directories, it only takes a single line of code to build and use the classifier.

There are multiple flavors of classification, but the two functions that we will look at are classify() and rolling.classify(). Again, we will get into the technical details in the intermediate post, as this is just a light primer.

The classify() function

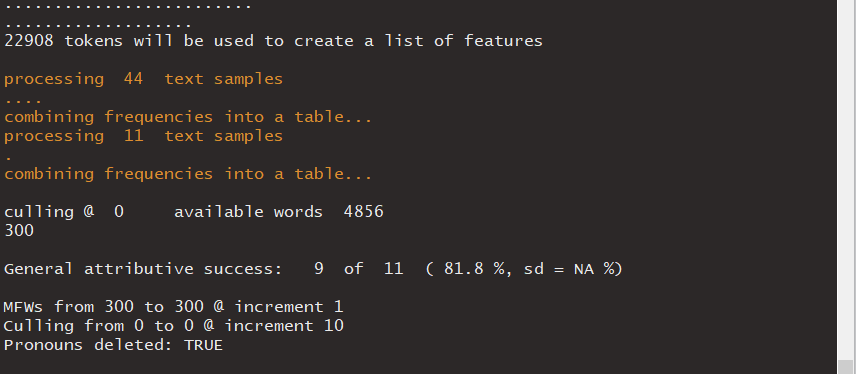

Two subdirectories must be present in the working directory for this function to work: primary_set contains the training data that the classification model will learn from, and secondary_set serves as the test set. For this Run the Jewels example, the primary_set directory contains 80% (44) of the songs in the total corpus (55), and the secondary_set directory contains the remaining 20% (11). Each rapper is sampled equally for both directories in order to avoid biasing the model.
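A split like this can be drawn by hand or with a few lines of base R. The sketch below is one possible way to do it, stratified by the author label in each file name; `split_corpus()` and the placeholder file names are hypothetical, and the post does not say how the actual split was made.

```r
# Sketch of an 80/20 split stratified by author (the text before the first "_").
split_corpus <- function(files, train_frac = 0.8, seed = 1) {
  set.seed(seed)  # make the random split reproducible
  authors <- sub("_.*$", "", basename(files))
  train <- unlist(lapply(split(files, authors), function(f) {
    # sample the same fraction of songs from each author
    f[sample.int(length(f), size = round(train_frac * length(f)))]
  }), use.names = FALSE)
  list(primary_set = train, secondary_set = setdiff(files, train))
}

# Placeholder file names: 10 songs per author (assumed, not the real corpus).
files <- c(sprintf("ElP_song%02d.txt", 1:10),
           sprintf("KillerMike_song%02d.txt", 1:10))
parts <- split_corpus(files)
print(lengths(parts))  # 16 training files, 4 test files
```

The two resulting vectors can then be copied into the primary_set and secondary_set directories, for example with file.copy().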

After running classification <- classify(), a GUI loads that lets me choose the function’s arguments, just like before. Assigning the function’s result to a variable lets us access different values afterwards. As shown, classification$success.rate returns the percentage of correctly classified songs, which is 81.8%.
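The same step can also be scripted without the GUI. The following is a sketch under assumptions: the wrapper `run_classifier()` is hypothetical, and the number of most frequent words is an illustrative value rather than the setting used for the result reported here.

```r
# Sketch: train on ./primary_set and test on ./secondary_set without the GUI.
# Assumes the stylo package is installed.
run_classifier <- function() {
  stylo::classify(
    gui = FALSE,
    training.corpus.dir = "primary_set",   # training texts
    test.corpus.dir = "secondary_set",     # held-out texts
    classification.method = "svm",         # support vector machine
    mfw.min = 100, mfw.max = 100           # assumed number of most frequent words
  )
}

# Run only when the package and both directories are present.
if (requireNamespace("stylo", quietly = TRUE) &&
    dir.exists("primary_set") && dir.exists("secondary_set")) {
  classification <- run_classifier()
  print(classification$success.rate)  # percentage of correctly classified texts
}
```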

The SVM model learned El-P’s and Killer Mike’s linguistic styles from the songs in the primary_set and applied that learning to the songs in the secondary_set, correctly classifying 9 of those 11 songs (81.8%). This accuracy is mostly due to the strength of the samples: each song’s word count is large enough to make the sample powerful. If the files in the directory were smaller, like individual verses, the accuracy would likely be lower.
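The figures quoted in this section can be checked with a little arithmetic. With 11 test songs, a success rate of 81.8% corresponds to 9 correct classifications:

```r
total_songs <- 55
train_songs <- 44
test_songs  <- total_songs - train_songs     # 11 songs held out for testing

train_share <- train_songs / total_songs     # 0.8, i.e. the 80% training share

# 9 correct out of 11 test songs, expressed as a percentage.
correct_songs <- 9
success_rate <- round(100 * correct_songs / test_songs, 1)
print(success_rate)  # 81.8
```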

The rolling.classify() function

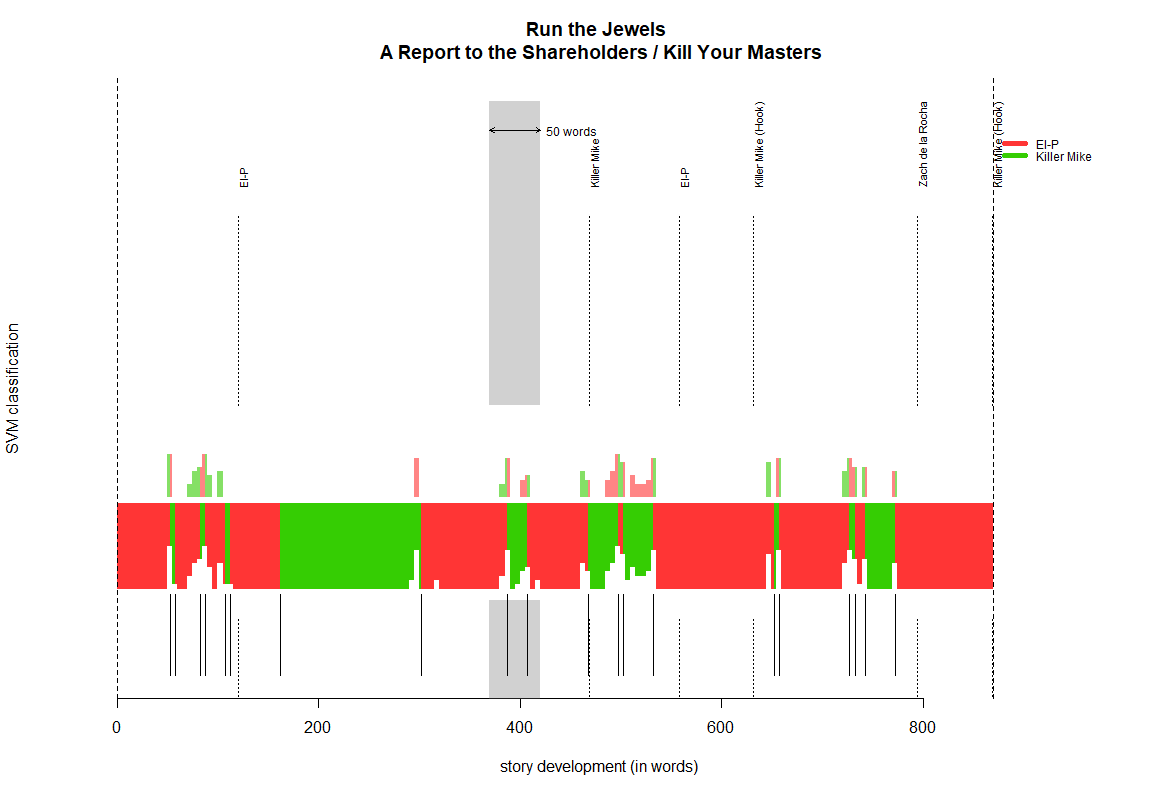

Two subdirectories must also be present in the working directory for this function to work: reference_set, which contains all of the samples that you want the model to learn from, and test_set, which contains the questionable or anonymous piece of writing that is of interest. Running the following code generates a visualization of classified samples, color-coded based on the naming convention used.

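```r
library(stylo)  # provides rolling.classify()

# Rolling SVM classification over overlapping 50-word slices of the test text.
rolling.info <- rolling.classify(write.png.file = TRUE, classification.method = "svm", mfw = 300,
                                 training.set.sampling = "normal.sampling",
                                 slice.size = 50, slice.overlap = 45,
                                 # one label per xmilestone marker, in order of appearance
                                 milestone.label = c("El-P", "Killer Mike", "El-P", "Killer Mike", "Zach de la Rocha", "Killer Mike"))
title(main = "Run the Jewels \n A Report to the Shareholders / Kill Your Masters")
```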

This song, A Report to the Shareholders / Kill Your Masters, has about 1,000 words in it, as shown by the x-axis. The first 180 words or so are classified as El-P, as indicated by the red coloring. This function also has handy labeling functionality, where excerpts can be given vertical labels. For example, if I go into test_set, find the song file that I am interested in, Kill Your Masters, and add the text xmilestone before each of the verses, the argument milestone.label assigns its values to the xmilestone markers in order. In the above song, there are six xmilestone entries, and the function’s argument has six corresponding labels. This is useful for comparing classification to some area of interest; in this case, I know which verse belongs to which rapper, so I can manually insert those vertical labels to see if the classifications are “accurate.” The depth of the colored blocks indicates certainty: full blocks mark the most certain classifications, and dips mark uncertainty.
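To get a feel for what a slice size of 50 and an overlap of 45 mean for a text of roughly 1,000 words, and to check that the number of labels matches the number of markers, a simplified base-R sketch follows. Both helpers are hypothetical; Stylo’s own slicing may treat the end of the text differently.

```r
# Hypothetical helper: start positions of overlapping word windows.
slice_starts <- function(n_words, slice_size = 50, slice_overlap = 45) {
  step <- slice_size - slice_overlap             # each window advances by 5 words
  seq(1, n_words - slice_size + 1, by = step)
}

starts <- slice_starts(1000)
print(length(starts))   # 191 windows for a 1,000-word text
print(head(starts))     # 1 6 11 16 21 26

# Hypothetical helper: count xmilestone markers in a text.
count_milestones <- function(text) {
  hits <- gregexpr("xmilestone", text, fixed = TRUE)[[1]]
  if (hits[1] == -1) 0L else length(hits)
}

# Toy text with two markers and two matching labels (illustrative only).
song <- "xmilestone first verse words xmilestone second verse words"
labels <- c("El-P", "Killer Mike")
stopifnot(count_milestones(song) == length(labels))
```

Because each window shares 45 of its 50 words with the previous one, neighboring classifications are strongly correlated, which is one reason the colored blocks change gradually rather than abruptly.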

Results

We can see that some of Killer Mike’s lyrics are classified as being authored by El-P, and that Zach de la Rocha’s verses are also mostly classified as El-P (which makes sense, given the model did not learn de la Rocha’s style). The depth of the colored blocks indicates certainty, so it appears that most of the uncertainty falls around verse transitions. This could indicate that the two rappers tend to collaborate in these areas, perhaps building a bridge from one rapper’s verse to the other’s so that the song remains fluid. In general, the model may be biased towards El-P, possibly because Stylo relies largely on MFW (most frequent words).
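For readers unfamiliar with the term, the most frequent words are simply the words with the highest relative frequencies in a text or corpus. The base-R sketch below is a rough illustration of the idea only; `most_frequent_words()` is a hypothetical helper, and it does not reproduce Stylo’s own tokenization or feature selection.

```r
# Hypothetical helper: relative frequencies of the n most frequent words.
most_frequent_words <- function(text, n = 3) {
  tokens <- unlist(strsplit(tolower(text), "[^a-z']+"))
  tokens <- tokens[nzchar(tokens)]                    # drop empty strings
  counts <- sort(table(tokens), decreasing = TRUE)    # most frequent first
  freqs <- setNames(as.numeric(counts), names(counts)) / length(tokens)
  head(freqs, n)
}

# Made-up sentence used purely as sample input.
sample_text <- "the jewels run the show and the crowd runs with the jewels"
top_words <- most_frequent_words(sample_text)
print(top_words)
```

In this toy sentence, "the" accounts for 4 of the 12 words. Function words like this dominate any MFW list, which is why two authors with similar habits in their most common words can be hard to tell apart.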

With a little tweaking of the filenames and directory names, a single function can be used to clean and classify the data. One line of code takes the place of the several lines that would otherwise be needed to break the text down into the desired n-grams, remove pronouns, format the text, build the model, train and test it, and even produce the visualizations. If you are interested in some of the technical details of how the library works, stay posted for the intermediate-level Stylo post.

Sources

Stylo (R library): Eder, M., Rybicki, J. and Kestemont, M. (2016). Stylometry with R: a package for computational text analysis. R Journal, 8(1): 107-121.

Rolling stylometry: Eder, M. (2016). Rolling stylometry. Digital Scholarship in the Humanities, 31(3): 457–469. https://doi.org/10.1093/llc/fqv010

LyricWikia API (Python): https://github.com/enricobacis/lyricwikia